A monthly review of climate, energy, environmental, and political policy issues

Articles compiled by Jonathan DuHamel wryheat@cox.net

“Equal laws protecting equal rights; the best guarantee of loyalty and love of country.” —James Madison (1820)

“During the course of administration, and in order to disturb it, the artillery of the press has been levelled against us, charged with whatsoever its licentiousness could devise or dare. These abuses of an institution so important to freedom and science are deeply to be regretted, inasmuch as they tend to lessen its usefulness and to sap its safety.” —Thomas Jefferson (1805)

CLIMATE SCIENCE

Net Zero CO2 Emissions: A Damaging and Totally Unnecessary Goal (part 1)

by Roy W. Spencer, Ph. D.

The goal of reaching “Net Zero” global anthropogenic emissions of carbon dioxide sounds overwhelmingly difficult. While humanity continues producing CO2 at increasing rates, how can we ever reach the point where these emissions start to fall, let alone reach zero by 2050 or 2060?

What isn’t being discussed (as far as I can tell) is the fact that atmospheric CO2 levels will start to fall even while humanity is producing lots of CO2.

Why is that? The reason is something called the CO2 “sink rate”. It has been observed that the more CO2 there is in the atmosphere, the more quickly nature removes the excess. The NASA studies showing “global greening” in satellite imagery since the 1980s are evidence of that.

(Read more) (Read part 2) ☼
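Spencer’s argument rests on a first-order sink: nature removes CO2 at a rate that grows with the excess over some baseline level. The following minimal Python sketch is a one-box model assumed for illustration, not Spencer’s own calculation. It shows why that assumption implies concentrations can peak and then fall while emissions are still positive: the level declines in any year when emissions E(t) are smaller than the removal k·(C − C_base). The function name simulate_co2, the 280 ppm baseline, the 2%-per-year removal fraction and the emissions path are all hypothetical values chosen to make the arithmetic visible.

```python
def simulate_co2(c_start, c_base, k, emissions):
    """One-box CO2 model stepped yearly: C[t+1] = C[t] + E[t] - k * (C[t] - c_base).

    c_start   -- starting concentration (ppm)
    c_base    -- baseline the sink relaxes toward (ppm); hypothetical
    k         -- fraction of the excess removed per year; hypothetical
    emissions -- anthropogenic additions for each year (ppm/yr)
    Returns the concentration path, one value per year boundary.
    """
    path = [c_start]
    c = c_start
    for e in emissions:
        sink = k * (c - c_base)  # removal grows with the excess over baseline
        c = c + e - sink
        path.append(c)
    return path


# Hypothetical scenario: emissions start at 5 ppm/yr and fall 3% a year,
# so they stay above zero for the whole century.
emissions = [5.0 * 0.97 ** t for t in range(100)]
path = simulate_co2(c_start=420.0, c_base=280.0, k=0.02, emissions=emissions)

# Year in which the concentration is highest.
peak_year = max(range(len(path)), key=lambda t: path[t])
print(f"peak of {path[peak_year]:.0f} ppm in year {peak_year}, "
      f"while emissions are still {emissions[peak_year]:.2f} ppm/yr")
print(f"year 100: {path[-1]:.0f} ppm")
```

With these made-up parameters, the concentration tops out within a couple of decades and then declines for the rest of the century, even though the emissions list never reaches zero. Different values of k or c_base move the peak but do not change the logic.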

See also my post: The nonsense of net zero.

The Good News About Climate Change, Video, 6 min. (link) ☼ https://youtu.be/JqKB5PG0gZk

Climate Fact-Check March 2024 Edition

Editor’s note: This summary serves as a fact check on the biggest false claims made in the media in March 2024. (Read more) ☼

Evidence Shows That Geological Features Play Major Role In Recent Ocean Heat Uptake

by James Edward Kamis

Many aspects of the climate change theory have been invalidated by a remarkable increase in our oceans’ temperature during the last twelve months. This sudden, anomalous, and inexplicable change in ocean surface temperature has left scientists who support the climate change theory baffled, because it doesn’t support the conclusions of their current ocean climate models. Even so, those advocating the validity of the climate change theory have stuck to their idea that all significant changes in ocean temperature are due to increases in human activity. Here we show that the only plausible explanation for this dramatic increase in ocean temperature is massive pulses of heat emitted from ocean floor geological features. (Read more) ☼

The extraordinary climate events of 2022-24

by Javier Vinós

The unlikely volcano, the warmest year, and the collapse of the polar vortex.

The climate events of 2022-24 have been truly extraordinary, ranging from an unlikely undersea volcanic eruption to the warmest year on record and the collapse of the polar vortex after three sudden stratospheric warming events. This rare convergence presents a unique learning opportunity for climatologists and climate aficionados alike, offering insights into a climate event that may not be repeated for hundreds or even thousands of years. (Read more) ☼

Our Weather Extremes Are Customary in History

by Ron Clutz

This report refutes the popular but mistaken belief that today’s weather extremes are more common and more intense because of climate change, by examining the history of extreme weather events over the past century or so. Drawing on newspaper archives, it presents multiple examples of past extremes that match or exceed anything experienced in the present day. That so many people are unaware of this fact shows that collective memories of extreme weather are short-lived. (Read more) ☼

Official Temperature Data Isn’t ‘Data’ At All

by H. Sterling Burnett

The Heartland Institute led the way in reporting on problems with the surface temperature record.

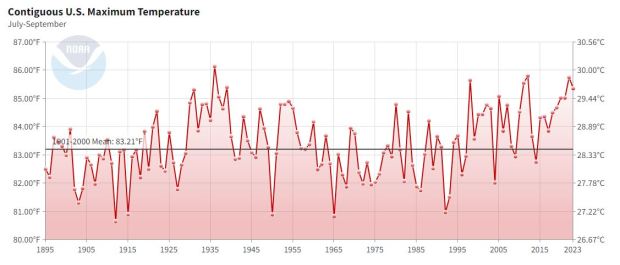

We have highlighted how the surface station record did not correspond to the temperatures recorded by global satellites and weather balloons, two alternative temperature data sources whose data sets closely track each other. Heartland has repeatedly exposed instances in both the United States and abroad where official agencies tamper with past temperature data at pristine stations, adjusting it to appear cooler than what was actually recorded, while adjusting recent temperatures upward. We were all over the adjustments made by corrupt NOAA scientists in 2015 before the Paris climate treaty negotiations—mixing data from unbiased ocean buoys with heat-biased temperature measurements taken from ships’ engine water intake inlets, which made it appear the ocean was suddenly warming faster than before.

Also, first, foremost, and most forcefully, we independently documented the serious problems with the official surface temperature record that arise because the vast majority of temperature stations are poorly sited. These stations fail NOAA’s own standards for quality, unbiased stations and report temperatures skewed by the Urban Heat Island (UHI) effect.

We found stations located next to the exhaust fans of air conditioning units, surrounded by asphalt parking lots and roads, on blistering-hot rooftops, and near sidewalks and buildings that absorb and radiate heat. … Many “stations” allegedly “reporting” temperatures don’t actually exist anymore, and haven’t for years. The government is just making up the data reported from many locations by averaging the temperatures recorded at other locations in the region. (Read more) ☼

Coastal Inundation: Rising Sea Levels Explained

by David Legates

SUMMARY: Sea level rise is an oft-cited result of anthropogenic climate change. The usual narrative is that sea levels rise due to the thermal expansion of seawater and the melting of ice caps, both due to the rise in air (and ocean) temperature caused by increasing greenhouse gas concentrations. However, the dynamics of why sea levels rise (or fall) relative to the coastal elevation (coastal inundation) are far more complex. This Special Report explains how and why coastal inundation occurs and provides a deeper insight into this complex issue. (Read full report) ☼

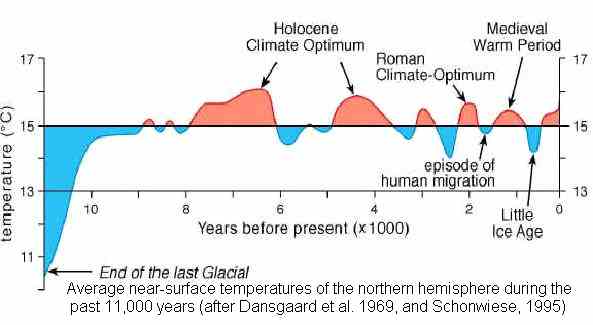

Studies Affirm Sea Levels Were 5 to 9 Meters Higher Than Today 7000-5000 Years Ago

by Kenneth Richard

Two studies, independently published, identify Mid-Holocene sea levels in northern Norway (north of the Arctic Circle) as 7 to 9 meters higher than today before declining to the present (Balascio et al., 2024, Nielsen et al., 2024).

This region of the Arctic was warm enough to support human settlements and boat harbors during the Medieval Warm Period. As the climate deteriorated into the Little Ice Age cooling centuries after the Medieval warmth, the accompanying sea level fall led to abandoned residences, ships, and harbors. The seas had become too shallow to sail in. (Read more) ☼

Islands That Climate Alarmists Said Would Soon “Disappear” Due to Rising Sea Found to Have Grown in Size (link) ☼

New Report: Globally, Hurricanes Not Getting Worse Or More Frequent (link) ☼

Antarctica Is Colder, Icier Today Than At Any Time In 5,000 Years (link) ☼

Observation of the Earth shows that deforestation and urbanization cause three times more warming than CO2 (link) ☼

CLIMATE SCIENCE BACKGROUND:

by Jonathan DuHamel

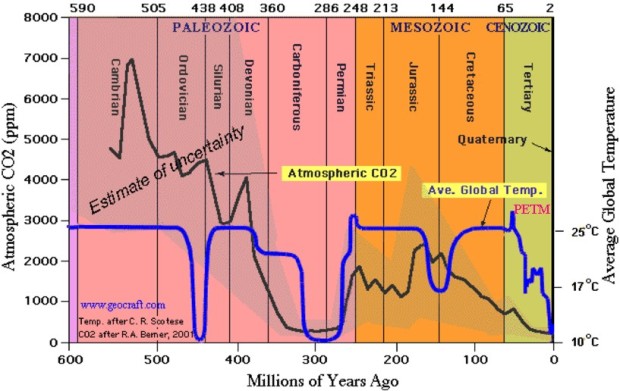

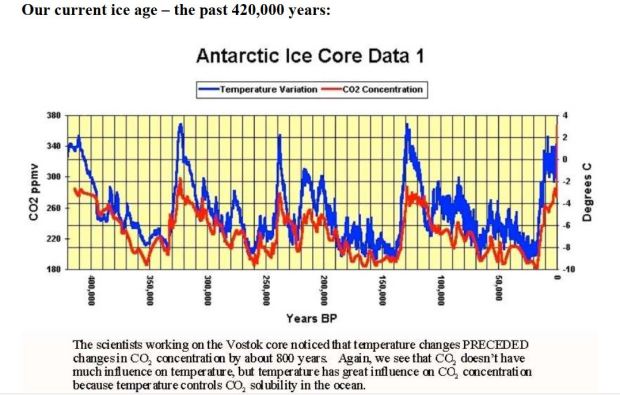

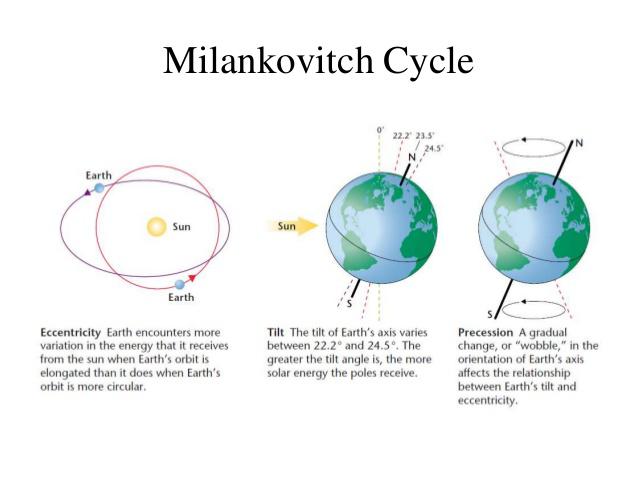

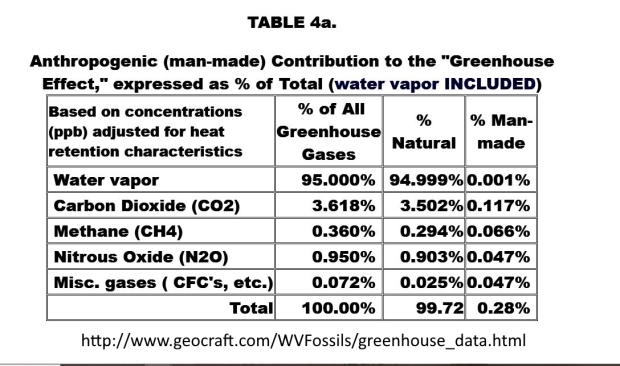

Geologic evidence shows that Earth’s climate has been in a constant state of flux for more than 4 billion years. Nothing we do can stop that. Much of current climate and energy policy is based upon the erroneous assumption that anthropogenic carbon dioxide emissions, which make up just 0.1% of total greenhouse gases, are responsible for “dangerous” global warming/climate change. There is no physical evidence to support that assumption. Man-made carbon dioxide emissions have no significant effect on global temperature/climate. In fact, when there is an apparent correlation between temperature and carbon dioxide, the level of carbon dioxide in the atmosphere has been shown to follow, not lead, changes in Earth’s temperature. All efforts to reduce emissions are futile with regard to climate change, but such efforts will inflict massive economic harm on Western nations. The “climate crisis” is a scam. U.N. officials have admitted that their climate policy is about money and power and destroying capitalism, not about climate.

By the way, like all planetary bodies, Earth loses heat through infrared radiation. Greenhouse gases interfere with (block) some of this heat loss. Greenhouse gases don’t warm the Earth; they slow the cooling. If there were no greenhouse gases, we would have freezing temperatures every night.

Submarine volcanoes must be considered when evaluating the causes of climate change. Usually, their effects are regional, not global; however, they may have global influences, such as changing precipitation patterns, as seen with the El Niño Southern Oscillation (ENSO).

For more on climate science, see my Wryheat Climate articles:

Geology and Climate Rocks Tell the Story

The Nonsense of “Net-Zero”

Climate Change in Perspective

A Review of the state of Climate Science

The Broken Greenhouse – Why CO2 Is a Minor Player in Global Climate

A Summary of Earth’s Climate History – a Geologist’s View

Problems with wind and solar generation of electricity – a review

The High Cost of Electricity from Wind and Solar Generation

The “Social Cost of Carbon” Scam Revisited

ATMOSPHERIC CO2: a boon for the biosphere

Carbon dioxide is necessary for life on Earth

Impact of the Paris Climate Accord and why Trump was right to drop it

Six Issues the Promoters of the Green New Deal Have Overlooked

Why reducing carbon dioxide emissions from burning fossil fuel will have no effect on climate ☼

See also: https://wattsupwiththat.com/2024/03/20/climate-the-movie-watch-here/

THE SCIENCE AND ENVIRONMENTAL POLICY PROJECT REPORTS

[SEPP provides a weekly roundup of news available every Monday as The Week That Was (PDF) and reprinted as a blog post here as Weekly Climate and Energy News Roundup. Click on date for full reports.]

APRIL 6:

Scope: This TWTW addresses the State of the Climate report presented by Ole Humlum and the lack of knowledge of recent climate history described by Ralph Alexander. It also briefly presents a bibliography for Climate: The Movie compiled by Andy May, and discusses how organizations that use the linear no-threshold (LNT) model abuse the scientific method, ignoring that humans are living organisms capable of repairing themselves. Also presented are the absurd steps taken by those who claim weather events are caused by climate change, and the coming shortage of US electricity generation that Washington ignores.

Humlum sums up with this:

“The global climate system is multifaceted, involving the sun, planets, atmosphere, oceans, land, geological processes, biological life, and complex interactions between them. Many components and their mutual coupling are still not fully understood or perhaps not even recognized. Believing that one minor constituent of the atmosphere (CO2) controls nearly all aspects of climate is naïve and entirely unrealistic. The global climate has remained in a quasi-stable condition within certain limits for millions of years, although with important variations playing out over periods ranging from years to centuries or more, but the global climate has never been in a fully stable state without change. Modern observations show that this behavior continues today; there is no evidence of a global climate crisis.”

To TWTW, the two most important conclusions are:

“The perception that extreme weather events are increasing in frequency and severity is primarily a consequence of modern technology – the Internet and smart phones – which have revolutionized communication and made us much more aware of such disasters than we were 50 or 100 years ago. Before 21st-century electronics arrived, many weather extremes went unrecorded, especially in then more sparsely populated areas of the globe.”

And:

“Much of the fault for the widespread belief that weather extremes are becoming worse can be attributed to the mainstream media, eager to promote the latest climate scare. Constant repetition of a false belief can, over time, create the illusion of truth – a phenomenon well known to psychologists, and one exploited by propagandists. The falsehood can even become a ‘noble lie’ when exploited for political purposes. The failure by climate reporters to put today’s extreme weather events in a true historical perspective is contributing to the belief that weather extremes are on the rise when they are not.”

APRIL 13:

Scope: Ed Calabrese published another paper showing that the LNT model is based on a significant omission of evidence, a practice that is common in environmental science today. In their monthly temperature report, Spencer, Christy, and the UAH science team demonstrate an integrity that is lacking in the temperature reports of NOAA and NASA-GISS. Most climate commentators ignore the dominant change to Earth from increased CO2: global greening. Advocates of “ocean acidification” have misled the public by ignoring ocean chemistry. The effects of false UN claims about climate change are showing up in European courts. The US EPA continues to abuse its respected position by making absurd claims about chemicals.

Non-Science: Edward Calabrese and Paul Selby had a paper published in the Archives of Toxicology demonstrating that additional research was hidden when the widely used and unworthy linear no-threshold (LNT) model was established. The LNT model is widely used by government regulators, such as the US EPA, and by some scientists to promote fear of chemicals without physical evidence.

Peak Greening? The dominant changing characteristic of Earth from increasing atmospheric carbon dioxide is the greening of Earth, with an increase in photosynthesis, including both land masses and the oceans.

APRIL 20:

Scope: Professors Lindzen, Happer, and Koonin have an essay asserting, with evidence, that carbon dioxide is essential for complex life on Earth and limiting emissions of greenhouse gases can result in mass starvation. Professor Howard Hayden discusses the failure of “climate scientists” to speak up when politicians make huge errors in presenting science. Independently, Jim Steele had a discussion showing the difference between the energy emitted by the surface of Earth and that going to space. Ron Clutz brings up a perceptive essay by Richard Lindzen on how academic and politicized science are deteriorating. Mark Mills discusses the “magical thinking” required to believe that the claimed energy transition will be easy or cheap. Note: “Net Zeroing” Fossil Fuels Will Cause Massive Human Starvation by Eliminating Nitrogen Fertilizer.

Click on “APRIL 20” above to read more.

CLIMATE MADNESS

$100 Billion per Year Needed for US Carbon Removal [The demand for $100 billion per year, to fix a problem which only exists in the fevered imagination of broken computer models, is beyond parody.] (link) ☼

‘Absolute Genius’: How Three Alarmist Billionaires Bankrolled The Fake Climate Catastrophe (link) ☼

Study: Replacements For Plastic Products Increase Greenhouse Gas Emissions In Nearly All Cases

by Steven Hayward

A study published in January in Environmental Science and Technology, entitled “Replacing Plastics with Alternatives Is Worse for Greenhouse Gas Emissions in Most Cases,” has the goods:

This article examines the greenhouse gas (GHG) emission impact of plastic products versus their alternatives. We assess 16 applications where plastics are used across five key sectors: packaging, building and construction, automotive, textiles, and consumer durables.

These sectors account for about 90% of the global plastic volume. Our results show that in 15 of the 16 applications a plastic product incurs fewer GHG emissions than their alternatives. In these applications, plastic products release 10% to 90% fewer emissions across the product life cycle. [It turns out paper shopping bags produce five times as much CO2 over their lifetime as plastic HDPE bags do]

Furthermore, in some applications, such as food packaging, no suitable alternatives to plastics exist. These results demonstrate that care must be taken when formulating policies or interventions to reduce plastic use so that we do not inadvertently drive a shift to nonplastic alternatives with higher GHG emissions. (Read more) ☼

Revolts because of stupid climate regulations:

Truckers challenge Biden administration over climate crackdown on electric big rigs (link) ☼

The Fury Of European Farmers Is Terrifying The Elites (link) ☼

Thousands of Canadians gather nationwide to protest Trudeau’s carbon tax (link) ☼

Media Jump On Latest Lawfare Theory That Big Oil Are Climate ‘Mass Murderers’ (link) ☼

Analysis: Even The IPCC’s Latest Report Doesn’t Support Climate Activists’ Lies (link) ☼

ENERGY ISSUES

In Another Blow To Biden’s Green Schemes, New York Scraps Three Offshore Wind Projects (link) ☼

Twenty-Five State AGs Sue Biden EPA Over Economy-Crushing Emissions Mandates (link) ☼

When Politics and Physics Collide: The belief that mandates and massive subsidies can summon a world without fossil fuels is magical thinking. The total direct and induced spending on the energy transition could easily exceed $5 trillion before a decade passes, or sooner, if advocates prevail. (link) ☼

Americans Face Rising Gas Prices Again

Overall, gas prices have risen about 50% since President Joe Biden took office. “Since the first day of his administration, Joe Biden has waged a war on fossil fuels, taking pride in denying drilling permits and land access for production,” Daniel Turner, executive director of the energy workers advocacy group Power the Future, … “Now those actions are catching up to us, but team Biden is thrilled: high gas prices will be used as a catalyst to force Americans into EVs against their will.” (Read more) ☼

Report: Biden Has Taken Over 200 Actions Against American Oil Since His Inauguration

by Bethany Blankley

President Joe Biden and his administration have taken over 200 actions against the U.S. oil and natural gas industry as energy prices have gone up, according to a new report. “President Biden and Democrats have a plan for American energy: make it harder to produce and more expensive to purchase,” the Institute for Energy Research states in a new report. (Read more) ☼

Study grades natural gas as best source for reliability, affordability and environmental impact

by Kevin Killough

A new study finds that natural gas is the most effective energy source meeting growing energy demands affordably and reliably, while balancing environmental and human impact.

The “Grading the Grid” study by the Mackinac Center for Public Policy, a pro-free market nonprofit, and Northwood University rates natural gas, coal, petroleum, nuclear, hydroelectric, wind, solar and geothermal generation sources on their reliability, environmental and human impact, cost, innovation and market feasibility.

Natural gas got an “A” grade, followed by nuclear, which got a “B+”. Wind and solar energy came in last, each receiving “F” grades, according to the study. (Read more) ☼

EVs, Wind, and Solar are Neither Reliable Nor Environmentally Friendly: Here’s Why

by Byron Soepyan

Wind and solar energy systems are not cheap, reliable or environmentally friendly, and EVs also prove to be dangerous, unreliable and expensive.

Various governments have made commitments to expand the use of electric vehicles (EVs) and alternative energy systems. The stated objectives include reducing pollution, improving human health, protecting the environment and providing reliable energy at lower costs. Among those jumping on this bandwagon are the governments of the United States, Canada, Australia, New Zealand and the European Union, and the Governorate of Vatican City State.

Here’s the big question: Will adopting these technologies achieve these goals? To get an answer, we will examine what they require for operation, decommissioning and supporting infrastructure. (Read more) ☼

UK Electricity Rates Five Times Higher Than China’s Thanks To Net Zero

by Chris Morrison

The United Nations established the Intergovernmental Panel on Climate Change in 1988 and in 1995 the first Climate Change Conference of Parties (COP1) was held in Berlin.

In the nearly 30 years since COP conferences began, the U.K. has halved its CO2 emissions so that we now account for a mere 1% of the global total. But in this same time interval, the developing world has massively increased its CO2 emissions.

For example, China’s CO2 emissions have quadrupled and now account for 29% of the global total. India’s emissions have tripled and now account for 7% of the global total. Both countries are still increasing their CO2 emissions.

The problem is that ‘green’ technologies are not very good. Electric cars and renewable energy are more expensive and inferior in performance to their fossil fuel equivalents.

So as the developing world industrializes, it is using fossil fuel technology to keep its costs down. Typically people in the UK pay more than twice as much for electricity as they do in the USA, where shale gas has transformed the energy market, and more than five times as much as in China, where they are still building coal-fired power stations. (Read more) ☼

Renewables will destroy America’s lifestyle back to the pre-1800s – this is the Biden energy plan!

by Ronald Stein P.E.

The electrical grid is expected to deliver continuous and uninterrupted electricity no matter what the weather, to support computers for hospitals, airports, offices, manufacturing, military sites, and telemetry, which all need a continuous, uninterrupted supply of electricity.

Yet, policymakers continue to subsidize wind turbines and solar panels (with taxpayers’ money) for the generation of electricity that DO NOT work most of the time.

I find it amusing that twenty-three states have adopted goals to move to 100 percent clean electricity by 2050. Of the six electrical generation methods, wind and solar cannot compete with hydro, nuclear, coal, or natural gas.

Interestingly, all the components of wind turbines and solar panels are also based on products made from fossil fuels. Thus, in a fossil-free society, we’re decaying back to the 1800s, as there will also be NO electricity. Life was short and hard for the common man just a couple of hundred years ago! (Read more) ☼

Destroying The Myth of Cheap Wind and Solar

by Isaac Orr and Mitch Rolling

Wind and solar advocates often cite a metric called the Levelized Cost of Energy (LCOE) to claim that these energy sources are cheaper than coal, natural gas, and nuclear power plants.

However, these claims, which are already tenuous due to rising wind and solar costs, ignore virtually all of the hidden real-world costs associated with building and operating wind turbines and solar panels while also keeping the grid reliable. (Read more) ☼
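The LCOE metric the authors criticize is conventionally computed as discounted lifetime costs divided by discounted lifetime electricity output. A minimal Python sketch of that ratio, assuming yearly cash flows and a single constant discount rate, makes the authors’ point concrete. Only the plant’s own costs and output enter the calculation, so system-level costs such as backup generation, storage, or extra transmission needed to keep the grid reliable never appear in it unless someone adds them deliberately. The function name lcoe and the example plant figures are hypothetical.

```python
def lcoe(costs, energy, rate):
    """Levelized cost of energy: discounted lifetime costs / discounted lifetime output.

    costs  -- total spending in each year (capital + O&M + fuel), year 0 first
    energy -- electricity delivered in each year (e.g. MWh), same length as costs
    rate   -- annual discount rate, e.g. 0.07 for 7%
    """
    if len(costs) != len(energy):
        raise ValueError("costs and energy must cover the same years")
    # Present value of every year's spending and every year's output.
    pv_cost = sum(c / (1 + rate) ** t for t, c in enumerate(costs))
    pv_energy = sum(e / (1 + rate) ** t for t, e in enumerate(energy))
    return pv_cost / pv_energy


# Hypothetical plant: $1,000,000 to build in year 0, then 20 years of
# $20,000/yr operating cost and 5,000 MWh/yr of output, discounted at 7%.
# Nothing here accounts for backup capacity, storage, or grid upgrades.
costs = [1_000_000.0] + [20_000.0] * 20
energy = [0.0] + [5_000.0] * 20
print(f"LCOE: ${lcoe(costs, energy, 0.07):.2f} per MWh")
```

Because the ratio is computed plant by plant, two sources with the same LCOE can impose very different costs on the grid as a whole. That gap is what the authors mean by hidden real-world costs.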

States Retreating From Green Energy As Costs Skyrocket, But Climate Policies Harder To Kill

by Kevin Killough

The Arizona Corporation Commission (ACC) recently took the unusual step of voting to pull back on the state’s renewable energy targets, over concerns they are too costly and produce few benefits. Most states are moving in the other direction, following California’s lead, but there are signs of some hesitation as the real costs of these policies are realized. (Read more) ☼

Biden’s Signature Climate Law Has a Major Achilles’ Heel — And Dems Are Making It Worse

by Nick Pope

President Joe Biden’s landmark climate bill is being held back by a lack of comprehensive permitting reform, the absence of which enables environmentalist lawsuits that impede green energy projects subsidized by the legislation.

The Inflation Reduction Act (IRA) contained hundreds of billions of dollars to subsidize green energy projects nationwide, but the bill did not include significant reform to the permitting process that would expedite construction timelines and insulate developments from environmental legal challenges. Unless Congressional Democrats can negotiate a permitting reform package with Republicans in an election year, these problems will continue to dog the IRA’s implementation, energy policy experts and stakeholders told the Daily Caller News Foundation. (Read more) ☼

Net Zero? China Dominates Surge In New Coal Power, Setting Record Capacity Globally

by Bloomberg News

Global coal-power capacity rose to a record last year, led by a surge in new plants in China and a slowdown in retirements around the world, according to a new report from Global Energy Monitor. The world’s coal fleet grew by 2% to 2,130 gigawatts, with China accounting for about two-thirds of the increase followed by Indonesia and India, according to the climate research firm. China also started construction on 70 gigawatts of new coal plants last year, nearly 20 times more than the rest of the world combined. (Read more) ☼

EV And AI Power Demands Will Only Fuel More Electric Rate Shocks (link) ☼

Expert’s EV Warning: ‘One Of The Biggest Energy Policy Blunders We’ve Ever Made’ (link) ☼

EVs, climate agenda are a national security threat used ‘to weaken us and ultimately destroy us,’ expert warns (link) ☼

Thousands of Solar Panels in Texas Destroyed by Hailstorm (link) ☼

200 Ways President Biden and the Democrats Have Made it Harder to Produce Oil & Gas (link) ☼

Biden Admin Announces Massive Restrictions On Alaskan Oil Reserve And Hampers Key Mining Project In One Fell Swoop (link) ☼

The War On Cars

In a fit of self-loathing, the European Union has begun to destroy the economic engine that pays its bills. Some of this is well known, but some is not, and it will astonish you. (Read more) ☼

STATE OF THE UNION

Six Lessons for Keeping Your Country

by Ron Clutz

Jonathan Newman provides a brief overview of economic fundamentals for a free society in his article “What Are Mises’s Six Lessons?” Ludwig von Mises’s Economic Policy: Thoughts for Today and Tomorrow has become quite popular recently: the Mises Book Store has sold out of its physical copies, and the PDF, which is available online for free, has seen over 50,000 downloads in the past few days. Read a summary of the six lessons here. ☼

ORGANIZED THEFT IN THE NAME OF GOVERNMENT – Biden’s Executive Order 14008 locks away 30% of U.S. land by 2030, under the pretext of ‘protecting the planet’ (link) ☼

The Brainwashing of America

By Jeannie DeAngelis

America was once a nation of free-thinking individualists priding themselves on the constitutional idea that God ordained and endowed each person as an end unto himself with rights to think, act, believe, and behave according to personal thoughts, conscience, and individual interest. According to the Declaration of Independence, those “inalienable rights” include life, liberty and the pursuit of happiness. Therefore, because government’s power is derived from the consent of the free, the job of those running the country is to “secure,” not undermine those rights and freedoms.

Our nation’s Founders knew that the nature of individualism is what stimulates the kind of spirit that brings about the sort of advances America has achieved since its founding. Yet, by trusting humans to think, act, and live according to their beliefs, the framers left the door wide open for enemies of liberty to infiltrate the minds and hearts of the easily influenced. (Read more) ☼

The Big Lie Behind DEI (Diversity, Equity and Inclusion) (link) ☼

11 ways Biden and his handlers are hell-bent on destroying America

from Fox News

1. Wipe out a 2,000-mile border.

2. Run up $35 trillion in national debt.

3. Appease or subsidize enemies like Iran and China.

4. In a multiracial democracy, redefine identity only as one’s tribal affiliation.

5. Recalibrate violent crime as understandable, cry-of-the-heart expressions of social justice.

6. Emasculate the military by using non-meritocratic standards of race, gender, and sexual orientation to determine promotion and commendation.

7. Reinvent the justice system to indict, bankrupt, convict, jail and eliminate political opponents.

8. Encourage the fusion of the bureaucratic state with the electronic media to form a powerful force for political audit, surveillance, censorship and coercion.

9. Make war on affordable gasoline and natural gas.

10. Marry late, but preferably not at all.

11. Turn world-class universities into indoctrination centers. (Read more) ☼

Biggest Corporate Welfare Scam of All Time

By far the biggest tax dodgers in the country are the wind and solar power industries.

by Stephen Moore

President Joe Biden keeps lecturing corporate America to “pay your fair share” of taxes. It turns out he’s right that some companies really are getting away scot-free from paying taxes.

But it isn’t Big Tech companies in Silicon Valley or the Wall Street financial company “fat cats” or big banks or Walmart. They pay billions in taxes.

The culprits here are the very companies that Biden is in bed with: green energy firms.

It turns out that despite all the promises over the past decade about how renewable energy is the future of power production in America, by far the biggest tax dodgers in the country are the wind and solar power industries. Over the past several decades, the green energy lobby — what I call the climate-change-industrial complex — isn’t paying its fair share. That’s because the vast majority of these companies pay nearly ZERO income taxes. (Read more) ☼

Electric vehicles are ‘direct wealth transfer’ from owners of gas-powered vehicles to EV owners, experts say. One expert said ‘all or nothing’ EV push is ‘one of the biggest energy policy blunders we’ve ever made’. (link) ☼

Biden’s Ill-Advised Alaska Oil Ban Means More Pain At Pump

by Diana Furchtgott-Roth

Earth Day is today, and once again President Joe Biden put the American people last and environmentalists first with Friday’s actions making swaths of Alaska off limits to the development of oil, natural gas, and critical minerals. America’s air is getting cleaner every year, and carbon emissions have declined by 1,000 million metric tons over the past 16 years. Even if America were to abandon all fossil fuels, global temperatures would only decline by two-tenths of 1 degree Celsius by 2100, according to government models. (Read more) ☼

Having Biden Declare A Climate Emergency Is A Crazy Idea

by David Blackmon

Bloomberg reported that unnamed officials inside the White House said the idea of declaring a climate emergency, first considered in 2021 and again in 2022, is once again under consideration. The only “emergency,” of course, is the president’s flagging approval ratings among impressionable young voters that threaten to derail his re-election chances. Declaring a climate emergency would arm the president with dictatorial powers to hamstring the domestic industry more than his regulators and hundreds of executive orders have already managed to do.

According to Bloomberg’s sources, actions being considered would include suspending offshore drilling, restricting exports of oil and LNG, and “throttling” the industry’s ability to transport its production via pipelines and rail. Given the industry’s crucial nature, it all sounds like a recipe for massive economic disaster. (Read more) ☼

The Kill Switch

by John Stossel

Soon the government might shut down your car. President Joe Biden’s new infrastructure law gives bureaucrats that power. You probably didn’t hear about that because when media covered it, few mentioned the requirement that by 2026, every American car must “monitor” the driver, determine if he is impaired and, if so, “limit vehicle operation.” (Read more) ☼

PARTING THOUGHTS

“Everything is determined, the beginning as well as the end, by forces over which we have no control. It is determined for the insect, as well as for the star. Human beings, vegetables, or cosmic dust, we all dance to a mysterious tune, intoned in the distance by an invisible piper.” – Albert Einstein

“Unthinking respect for authority is the greatest enemy of truth.” – Albert Einstein

END