A monthly review of climate, energy, and environmental policy issues

STATE OF THE UNION

“I sometimes wonder if our leaders are very smart and just putting us on, or very stupid and mean it.” – Mark Twain

As we leave 2022, how could we characterize the happenings of the year? We lost energy independence but gained many new pronouns; the president declared our southern border secure, but that security seemed to apply only to illegal entrants; and political “woke” rhetoric deemed that men could get pregnant and let elementary school children witness “drag queen” performances.

Perhaps 2022 was not so different from the year described by Charles Dickens in the opening paragraph of his novel “A Tale of Two Cities” (1859):

“It was the best of times, it was the worst of times, it was the age of wisdom, it was the age of foolishness, it was the epoch of belief, it was the epoch of incredulity, it was the season of Light, it was the season of Darkness, it was the spring of hope, it was the winter of despair, we had everything before us, we had nothing before us, we were all going direct to Heaven, we were all going direct the other way—in short, the period was so far like the present period, that some of its noisiest authorities insisted on its being received, for good or for evil, in the superlative degree of comparison only.”

Consider also Thomas Jefferson’s words, written in 1822:

“Man, once surrendering his reason, has no remaining guard against absurdities the most monstrous, and like a ship without rudder, is the sport of every wind. With such persons, gullibility, which they call faith, takes the helm from the hand of reason and the mind becomes a wreck.” [This could apply to the current climate scam.]

“The worst ideas in Congress never die; they just get sneaked into unrelated bills.” – Elizabeth Nolan Brown

How to Destroy the United States

by Victor Davis Hanson

It would be hard to imagine any planned agenda to destroy America that would have been as injurious as what we already suffered the last two years. Read the ten points mentioned by Hanson. ☼

Who’s Really Facilitating America’s Border Crisis? Biden Isn’t Acting Alone

by Rob Bluey

Catholic Charities and other non-governmental organizations are working in concert with the Biden administration to facilitate the worst border crisis in American history, according to an investigation that examined the movement of 30,000 mobile devices in the United States.

The shocking report is a snapshot of the border crisis over the course of just one month and it shows how the mass resettlement of illegal aliens is impacting nearly every congressional district in America.

Using the movement patterns of anonymized cell phones, The Heritage Foundation investigation reveals how tens of thousands of illegal aliens are making their way to the U.S. interior thanks to the aid and comfort of a network of private organizations. (Read more) ☼

Waste, Fraud, and Abuse: Rand Paul Releases His 2022 Festivus Report

by Spencer Brown

U.S. Senator Rand Paul (R-KY) kept up his holiday tradition of releasing an annual “Festivus Report”, shining a light on the ways in which the federal government wasted Americans’ hard-earned tax dollars on frivolous, woke, and absurd projects during the year. In his 2022 issue of the Festivus Report, Paul logged $482,276,543,907 worth of wasteful spending, “including a steroid-induced hamster fight club, a study to see if kids love their pets, and a study of the romantic patterns of parrots.” Yes, really. “No matter how much money’s already been wasted, politicians keep demanding even more,” Paul noted in this year’s report. (Read more) ☼

CLIMATE ISSUES

The world is being subjected to a great scam concerning climate and energy. The purpose is to impose global governance by elitists.

Dr. John Christy, Distinguished Professor of Atmospheric Science and Director of the Earth System Science Center at the University of Alabama in Huntsville, discusses climate.

Christy interview video (49 minutes): https://youtu.be/qJv1IPNZQao

Summary:

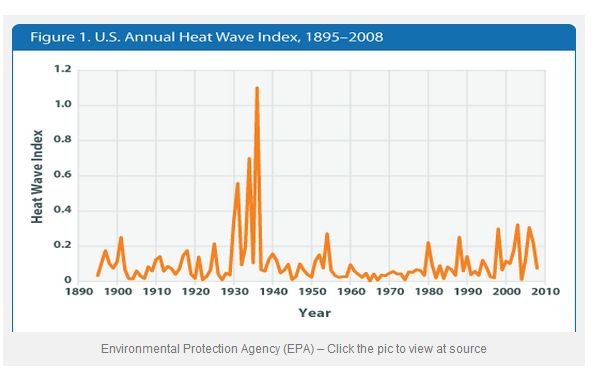

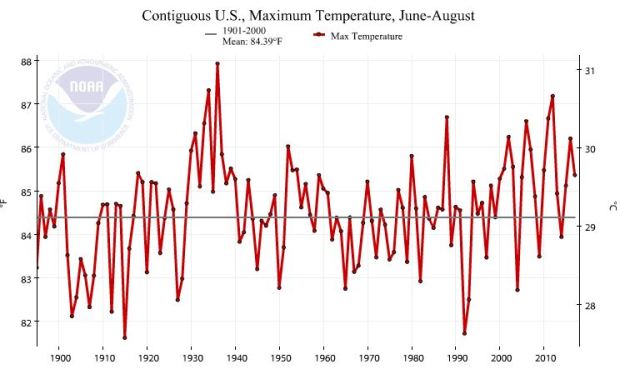

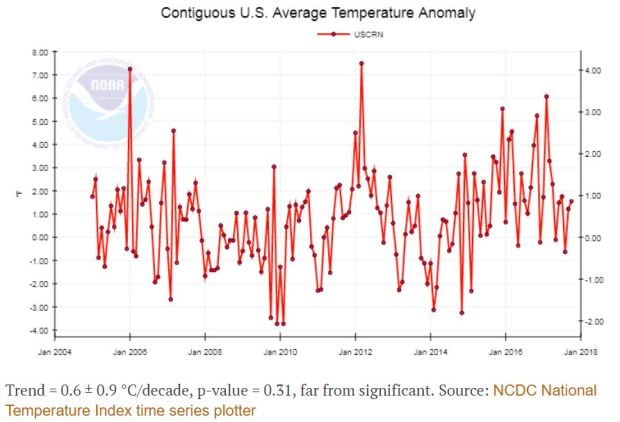

By using data sets built from scratch, based on satellite and other data, Christy, with other scientists including former NASA scientist Roy Spencer, has been testing the theories generated by climate models to see how well they hold up to reality. Their findings? On average, the latest models of the deep layer of the atmosphere warm about twice as fast as observed, presenting a deeply flawed and unrealistic representation of the actual climate. 100% of climate models produce more warming than physical observations. There is no climate crisis. There has been no increase in extreme weather events during the past 140 years. According to satellite data, Earth has warmed 0.5°C during the past 40 years. None of that warming is reliably attributable to human activity. Carbon dioxide invigorates the biosphere. Energy production from fossil fuels has a much lower environmental footprint than wind and solar energy production.

Political policies have no effect on climate. ☼

Misperception and amplification of climate risk

by Judith Curry

“Something frightening poses a perceived risk. Something dangerous poses a real risk.” – Swedish physician Hans Rosling et al.

This post is a follow-up to my recent post, Victims of the faux climate ‘crisis’. Part I: Children. The issue of psychological trauma of children is one that I am continuing to work on, to identify root causes and a way forward.

The theme of this particular post is how our perceptions of risk differ from the actual risk itself. Understanding this difference provides insights into these fears, as well as into how these differences are manipulated by propagandists. (Read more) ☼

Climate and CO2 Hysteria Is Optional

by Ron Clutz

Those who oppose economically destructive “climate” policies – like those promoted by the Biden administration and at the recent United Nations COP27 conference – will continue to fail to stop the advance of these policies so long as they continue to accept the false claim that warming of the planet and carbon dioxide emissions are harmful.

They are not. On balance, global warming and CO2 emissions are beneficial.

Before getting to why that is, however, it is crucial to understand why accepting the false climate claim is so harmful.

When the destructiveness of climate policies is shown, the response is that the policies nevertheless are necessary to address what President Biden refers to as the “existential threat” of global warming and increased CO2 emissions.

When it is noted that these climate policies will at most microscopically and insignificantly reduce temperatures and CO2 emissions, climate policy mandarins push for even more draconian policies.

The result has been that since the 1990s, climate policies have become increasingly destructive and wasteful. Even worse, their continued intensification appears unlikely to be stopped until the public and policymakers are persuaded that global warming and CO2 emissions are not harmful. As Margaret Thatcher famously said: “First you win the argument, then you win the vote.”

To win this argument, it is necessary to focus on the scientific facts. (Read more) ☼

The Top FIVE Climate Change LIES

by Laurence Fox

Laurence Fox breaks apart the lies repeatedly fed to the public and details the manipulation by the billionaire-funded lobby groups and activists.

Climate Lie Number One: Wind Power Is NINE Times CHEAPER Than Gas

Climate Lie Number Two: Island Countries Are SINKING Into The Sea

Climate Lie Number Three: Net Zero WILL Make YOUR Bills Cheaper

Climate Lie Number Four: Storms Are Getting MORE Frequent And MORE Intense

Climate Lie Number Five: Climate Change Is KILLING People

For details, watch this video (13 minutes). ☼

MIT climate scientist Dr. Richard Lindzen rejects ‘climate change’ as ‘a quasi-religious movement predicated on an absurd ‘scientific’ narrative’

Richard Lindzen’s new paper: An Assessment of the Conventional Global Warming Narrative

Some highlights noted by Climate Depot:

CO2 is a particularly ridiculous choice for a ‘pollutant.’ Its primary role is as a fertiliser for plant life. Currently, almost all plants are starved of CO2. Moreover, if we were to remove a bit more than 60% of current CO2, the consequences would be dire: namely death by starvation for all animal life. It would not likely lead to a particularly cold world since such a reduction would only amount to a couple of percent change in the radiative budget. After all, a 30% reduction of solar radiation about 2.5 billion years ago did not lead to an Earth much colder than it is today, as we earlier noted in connection with the Early Faint Sun Paradox.

The Earth’s climate has, indeed, undergone major variations, but these offer no evidence of a causal role for CO2. For the glaciation cycles of the past 700 thousand years, the proxy data from the Vostok ice cores shows that cooling precedes decreases in CO2 despite the very coarse temporal resolution (Jouzel et al., 1987; Gore, 2006). Higher temporal resolution is needed to show that warming preceded the increase in CO2 as well (Caillon et al., 2003). For earlier variations, there is no suggestion of any correlation with carbon dioxide at all, as shown in Figure 9a, a commonly presented reconstruction of CO2 levels and ‘temperature’ for the past 600 million years or so.

This all leaves us with a quasi-religious movement predicated on an absurd ‘scientific’ narrative. The policies invoked on behalf of this movement have led to the US hobbling its energy system (a process that has played a prominent role in causing current inflation), while lifting sanctions for Russia’s Nordstream 2 pipeline, which was designed to bypass the existing pipeline through the Ukraine used to supply Germany. It has caused much of the European Union to ban exploitation of shale gas and other sources of fossil fuel, thus leaving it with much higher energy costs, increased energy poverty, and dependence on Russia, thus markedly reducing its ability to oppose Mr Putin’s aggressions. …

Unless we wake up to the absurdity of the motivating narrative, this is likely only to be the beginning of the disasters that will follow from the current irrational demonization of CO2. Changing course will be far from a simple task. As President Eisenhower noted in his farewell address in 1961: “The prospect of domination of the nation’s scholars by Federal employment, project allocations, and the power of money is ever present and is gravely to be regarded. Yet, in holding scientific research and discovery in respect, as we should, we must also be alert to the equal and opposite danger that public policy could itself become the captive of a scientific-technological elite.” ☼

CLIMATE SCIENCE BACKGROUND:

Geologic evidence shows that Earth’s climate has been in a constant state of flux for more than 4 billion years. Nothing we do can stop that. Much of current climate and energy policy is based upon the erroneous assumption that anthropogenic carbon dioxide emissions, which make up just 0.1% of total greenhouse gases, are responsible for “dangerous” global warming/climate change. There is no physical evidence to support that assumption. Man-made carbon dioxide emissions have no significant effect on global temperature/climate. In fact, when there is an apparent correlation between temperature and carbon dioxide, the level of carbon dioxide in the atmosphere has been shown to follow, not lead, changes in Earth’s temperature. All efforts to reduce emissions are futile with regard to climate change, but such efforts will impose massive economic harm on Western nations. The “climate crisis” is a scam. U.N. officials have admitted that their climate policy is about money and power and destroying capitalism, not about climate. By the way, like all planetary bodies, Earth loses heat through infrared radiation. Greenhouse gases interfere with (block) some of this heat loss. Greenhouse gases don’t warm the Earth; they slow the cooling. If there were no greenhouse gases, we would have freezing temperatures every night.

For more on climate science, see my Wryheat Climate articles:

A Review of the state of Climate Science

The Broken Greenhouse – Why Co2 Is a Minor Player in Global Climate

A Summary of Earth’s Climate History-a Geologist’s View

Problems with wind and solar generation of electricity – a review

The High Cost of Electricity from Wind and Solar Generation

The “Social Cost of Carbon” Scam Revisited

ATMOSPHERIC CO2: a boon for the biosphere

Carbon dioxide is necessary for life on Earth

Impact of the Paris Climate Accord and why Trump was right to drop it

New study shows that carbon dioxide is responsible for only seven percent of the greenhouse effect

Six Issues the Promoters of the Green New Deal Have Overlooked

Why reducing carbon dioxide emissions from burning fossil fuel will have no effect on climate ☼

CLIMATE & ENERGY MADNESS

The climate movement is bizarre. . . . It’s taken control of all these elite institutions. The movement’s ideology is the official religion of the British government, including the British Conservative Party. It’s the official religion of the United Nations. It’s the official religion of the World Economic Forum. Climate activists have made the great reset, which is fundamentally about a transition to renewables for climate change, the dominant ideology of the global elites. . . . The whole mainstream news media and the global elites are basically part of this cult. The idea that the world is coming to an end is mainstream among journalists. – Michael Shellenberger

Demands for ‘climate reparations’ are laughable

by Dan Hannan, Washington Examiner

The demands for climate reparations from wealthy countries are so absurd, so unscientific, and so offensive to natural justice that it is difficult to know where the criticism should begin.

The argument is that, since countries that industrialized earlier produced a lot of carbon a hundred years ago, they now owe a debt to poorer states. Naturally, this argument appeals to assorted Marxists, anti-colonialists, and shakedown artists, and COP27 has been dominated by insolent demands for well-run states to pony up. (Read more) ☼

Why reparations now?

by Mark C. Ross

The absurdity of the concept of reparations for slavery, an institution that ended several generations ago, is beyond mind-boggling. But there’s a blatantly cynical reason for the sudden lurch in that direction: formerly reliable Black voters are now slipping away from the Democrats. This also explains Biden’s recently expressed profuse generosity towards the nations of sub-Saharan Africa. (Read more) ☼

Meanwhile back on the real Earth

OP ED Watch

If you’re looking for a real climate crisis, forget Sharm el-Sheikh and look at the damage climate change policy is doing to real people. For instance, “Brits are paying the highest electricity bills in the entire world”. Having narrowly edged out the Republic of Ireland. And by a remarkable coincidence, “UK faces biggest fall in living standards on record”. Not, arguably, the best time to try to give away billions to pay for bad weather on the other side of the world while continuing to hammer your own energy industry. (Read more) ☼

Connecticut Just Made Climate Change Studies Compulsory

by Eric Worrall

Starting next July, Connecticut will become one of the first states in America to mandate climate change studies across its public schools as part of its science curriculum. Currently, nearly 90% of public schools across Connecticut include climate change studies in their curriculums. However, by mandating it as part of state law from grades five to 12, climate education will effectively become protected from budget cuts and climate-denying political views at a time when education in the US has become a serious culture war battleground. (Read more) ☼

EVs

Swiss look to ban use of electric cars over the winter to save energy (Link)

The Muddled Reality Of Electric Cars

by Bjorn Lomborg

Climate activists and politicians constantly tell us electric cars are cleaner, cheaper, and better. Germany, the U.K., and Japan, among other countries, will even prohibit the sale of new gas and diesel cars within a decade or two.

But if electric cars are really so good, why do we need to ban the alternatives? And subsidize electrics to the tune of $30 billion per year?

The reality is far more muddled than the boosters of electric cars would have you believe. Carbon emissions from an electric car depend on whether it is recharged with clean or coal power.

Moreover, battery manufacturing requires lots of energy, which today is mostly produced with coal in China. That is why the International Energy Agency (IEA) estimates that an electric car using the global average mix of power sources over its lifetime will still emit about half as much CO2 as a gas car. This will reduce global temperatures by only 0.0001°C by 2100. (Read more) ☼

The Mirage of Electric Vehicles

by Willis Eschenbach

For those who think that electric vehicles make a difference … think again.

The Department of Energy’s Argonne National Lab has just released a study showing that in 2021, US privately owned plug-in hybrid electric vehicles (PHEVs) and electric vehicles (EVs) “saved about 690 million gallons of gasoline.” (That’s about 4 days’ worth of gas.) (Read more) ☼

The Elites’ War On Cars

by I & I Editorial Board

No human invention has expanded liberty like the automobile. That’s one of the reasons that the Western ruling class wants to end private ownership of cars. The other reason is just as insidious. The elites’ crusade against the automobile is also motivated by the same reason they’re waging a war on food. They are convinced Earth is running out of resources and they want to hoard as much as they are able for themselves. The most efficient method to guarantee that their bellies remain always full, and the tanks of their jets, yachts, limousines, and luxury cars are filled with fossil fuels, is to restrict consumption by those “common people” who make up the middle and lower classes. (Read more) ☼

ENERGY ISSUES

Will Nuclear Fusion Power Save Us?

by Dr. David Whitehouse

“Nuclear fusion breakthrough,” are the world’s headlines today. Eventually we will have free, pollution-free energy. No CO2 emissions, we will be saved. I have lived with the promise of nuclear fusion all my life and it has always been decades away. It’s become something of a bad joke amongst the science community that fusion is always decades away.

Nuclear fusion liberates energy by combining light atoms – isotopes of hydrogen – rather than by using the radioactive decay of large atoms such as uranium and plutonium – nuclear fission. It could have many advantages: the reaction can be switched off (not possible with fission), it uses water as a fuel, and it produces very little waste. The question is, how do you fuse atoms? (Read more to learn the pros and cons) ☼

‘Net zero’ is a lie, admits the utility industry and its regulators

by Marc Morano

[“net zero” means producing electricity with no carbon dioxide emissions.]

A new report from the Electric Power Research Institute (EPRI) buries net zero. Investment managers, publicly traded companies, regulators, politicians and more who have so far talked very loosely about net zero should now be on notice that they are talking about pure fantasy, if not falsehood. For investment managers and publicly traded companies, net zero is false and misleading and should have legal ramifications. (Read more) ☼

The Energy Storage Conundrum

by Francis Menton

An electrical grid powered mostly by intermittent generators like wind and sun requires full backup from some source; and if that source is to be stored energy, the amounts of storage required are truly staggering. When you do the simple arithmetic to calculate the storage requirements and the likely costs, it becomes obvious that the entire project is completely impractical and unaffordable. The activists and politicians pushing us toward this new energy system of wind/solar/storage are either being intentionally deceptive or totally incompetent.

(Read full paper, 32 pages) ☼

Energy Storage Report: Hydrogen as An Alternative to Batteries

by Francis Menton

At first blush, hydrogen may seem to offer the obvious solution to the most difficult issues of energy storage for backing up intermittent renewable generation. In particular, the seasonal patterns of generation from wind and sun require a storage solution that can receive excess power production gradually for months in a row, and then discharge the stored energy over the course of as long as a year. No existing battery technology can do anything like that, largely because most of the stored energy will simply dissipate if it is left in a battery for a year before being called upon. But if you can make hydrogen from some source, you can store it somewhere for a year or even longer without significant loss. Problem solved!

Well, there must be some problem with hydrogen, or otherwise people would already be using it extensively. And indeed, the problems with hydrogen, while different from those of battery storage, are nevertheless equivalently huge. Mostly, to produce large amounts of hydrogen without generating the very greenhouse gas emissions you are seeking to avoid, turns out to be enormously costly. And then, once you have the hydrogen, distributing it and handling it are very challenging. (Read more) ☼

Save America’s grid!

by David Wojick

It is no secret that America’s electric power grid is becoming unreliable. The secret is whose fault it is. Not knowing who to blame makes it hard to fix.

If I could get a show of hands I bet that almost no one has heard of the North American Electric Reliability Corporation. They are called NERC which rhymes with jerk. They are a private corporation endowed with federal authority to keep America’s lights on. NERC makes and enforces the regulations governing grid reliability in America. The looming threat of numerous blackouts is their fault. They have obviously failed to maintain reliability, which is their specific mission. (Read more) ☼

How Climate Activists Have Increased CO2 Emissions By Vilifying Nuclear

by Leah Barkoukis

Climate activists who have successfully vilified nuclear energy, leading to plant closures around the Western world, may be surprised to learn that their efforts have actually increased greenhouse gas emissions, according to a report from The Breakthrough Institute, an environmental research center. As nuclear proponent Michael Shellenberger has explained, the true reason climate activists oppose nuclear energy is that it means renewables are unnecessary. (Read more) ☼

Power Grids Being Ruined By Preferential Treatment Of Unreliable Electricity

by Alex Epstein

Today’s grids are being ruined by systemic preferences for unreliable electricity:

1. no price penalty for being unreliable

2. huge subsidies for unreliables

3. mandates for unreliables

Congress should end these now.

Policy solution: End all mandates for unreliable solar and wind

These mandates, which require areas to use solar or wind and shut down reliable fossil fuels and nuclear, regardless of the impact on cost and reliability, should be ended at every level: national, state, and local.

Summary: America can stop the decline of our grid and move toward low-cost, reliable, and cleaner electricity by ending all preferences for unreliable electricity:

1. Require tech-neutral reliability standards

2. End all solar and wind subsidies

3. End all solar and wind mandates (Read full report) ☼

Biden Admin Prepares To Kill Alaska Mine, Hobbling Green Agenda And Costing State Billions In Potential Revenues

by Jack McEvoy

The Environmental Protection Agency (EPA) recommended prohibiting the operators of Southwestern Alaska’s Pebble Mine from disposing of waste material in the nearby Bristol Bay, a regulation that would prevent the mine from opening. However, the mine could generate billions in revenue by producing minerals that are crucial in supporting the Biden administration’s “clean energy transition.”

The EPA said Pebble Mine’s potential waste discharges would threaten nearby sockeye salmon fisheries by polluting the water and preventing the fish from spawning and reproducing, according to an agency proposal released Thursday. Despite this, the mine would extract about 1.5 billion tons of copper, molybdenum as well as other critical minerals and could deliver more than $8 billion to Southwestern Alaska, according to a report published by Northern Dynasty Minerals, the mine’s owner. The U.S. Army Corps of Engineers found that Pebble Mine “would not be expected to have a measurable effect on fish numbers and result in long-term changes to the health of the commercial fisheries in Bristol Bay.” The project could generate an estimated $1.7 billion in state tax revenue and $1.4 billion in federal taxes as the operator will pay both governments royalties in exchange for the rights to extract precious metals over a 20-year period, according to the report. The mine also sits on more than 107 million ounces of gold. (Read more) ☼

“There is but one straight course, and that is to seek truth and pursue it steadily.” —George Washington (1795)

END